توظيف برمجيات الترجمة 2023-2024

Topic outline

-

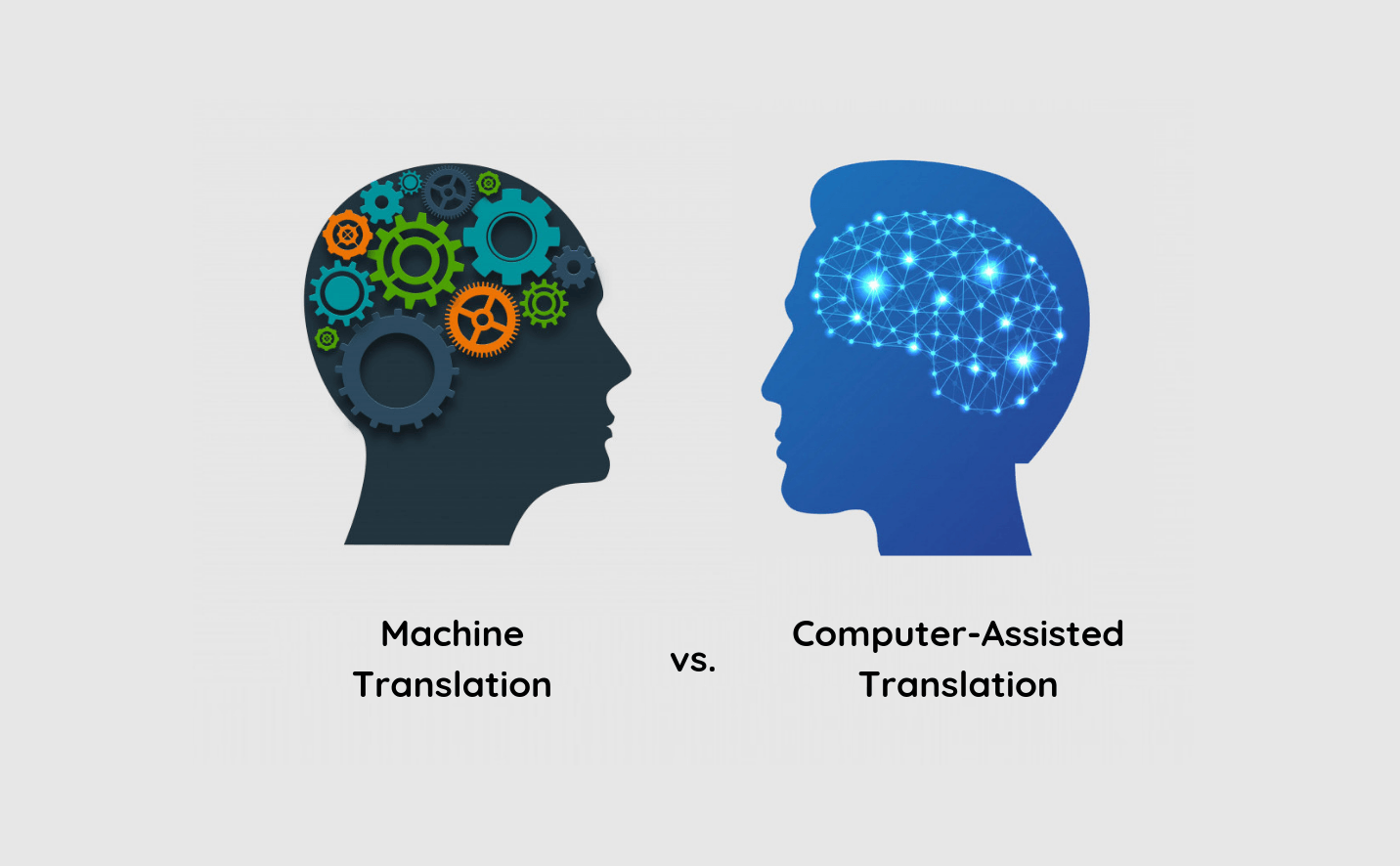

CAT tools and Machine Translation

CAT tools and Machine TranslationThis course is designed for 1st year translation students. It focuses on exploring Machine translation and CATtools (Computer-assisted translation). Students need to understand the importance of these tools in the modern world and how to use them.

Professor: Hadjer SACI

Specialty: Translation and Interpretation

Target audience: 1st Year Translation Students

Time: 1.30h/Week

Email: hadjer.saci@univ-msila.dz

-

Objectives:

This course aims to: -

Workshop

Pre-requisites:

Good computer skills are important. Otherwise, the students are required to develop their skills in using computer software because this course focuses on CAT software.

Basic level in translation. -

Opened: Friday, 3 May 2024, 12:00 AMDue: Friday, 10 May 2024, 12:00 AM

First, students are invited to look for more information about the historical overview of Machine translation/CAT tools and their developments.

-

Objectives:

This course aims to: -

Workshop

Pre-requisites:

Good computer skills are important. Otherwise, the students are required to develop their skills in using computer software because this course focuses on CAT software.

Basic level in translation. -

Opened: Friday, 3 May 2024, 12:00 AMDue: Friday, 10 May 2024, 12:00 AM

First, students are invited to look for more information about the historical overview of Machine translation/CAT tools and their developments.

-

-

-

A brief history can explain the main developments of Machine Translation over time. The latter witnessed great achievements and It has been greatly improved. Now, MT can make accurate translations from/into many languages.

History of machine translation.

For war reasons, MT's first appearance was in the 50s during the Cold War, as the United States was afraid of the development of the Soviet Union. The Americans believed that if they could translate Soviet scientific documents, they would have been able to know the orientations of the Soviets and preceded them for many inventions and scientific and military discoveries.

Early MT methods predominantly fell into two categories: rule-based and corpus-based. Rule-based machine translation (RBMT) relies on manually written rules and bilingual dictionaries for translation. However, this approach was labor-intensive and challenging to scale for open-domain or multilingual translation.

In 1954, a significant milestone was achieved when Georgetown University, in collaboration with IBM, demonstrated Russian–English MT using the IBM-701 computer. Despite early enthusiasm, funding for MT research dwindled following the 1966 Automatic Language Processing Advisory Committee (ALPAC) report, which cast doubt on the feasibility of MT.

The dominance of RBMT continued until the emergence of corpus-based methods in the 2000s, fueled by the availability of bilingual corpora. Example-based machine translation (EBMT), statistical machine translation (SMT), and neural machine translation (NMT) became the main corpus-based approaches.

EBMT, proposed in the mid-1980s, relied on retrieving similar sentence pairs from bilingual corpora for translation. SMT, introduced in 1990, automated the process of learning translation knowledge from data. Its adoption was initially slow due to the complexity and the prevalence of RBMT. However, SMT gained traction with the release of SMT toolkits like Egypt and GIZA.

Phrase-based SMT methods, introduced in 2003, further improved translation quality and developed open-source systems like "Pharaoh" and "Moses." Google's internet translation service, based on phrase-based SMT, launched in 2006, followed by similar services from Microsoft and Baidu.

NMT, proposed in 2014, marked a significant breakthrough by using end-to-end neural network models for translation. Its rapid deployment online, within a year of its proposal, underscored its effectiveness. Subsequent advancements, including convolutional sequence-to-sequence models and the Transformer model, further enhanced translation quality.

The success of NMT spurred research into improving multilingual translation quality and efficiency. Additionally, simultaneous translation (ST) emerged as a promising direction, with significant progress in speech-to-speech (S2S) translation systems since the 1980s, culminating in open-domain spontaneous translation systems and the establishment of forums like the International Workshop on Spoken Language Translation (IWSLT) in 2004 to promote further development.

Haut du formulaire

The emergence of neural machine translation (NMT) and neural speech recognition has paved the way for new simultaneous translation (ST) systems, aiming to automate simultaneous interpreting by providing near-real-time translations with only a few seconds of delay. Simultaneous interpretation, a mentally demanding task, requires intense concentration and linguistic dexterity, making it challenging for human interpreters. Due to the demanding nature of simultaneous interpretation, there is a limited pool of qualified interpreters globally. Additionally, human interpreters often work in teams and rotate frequently to maintain accuracy and prevent fatigue-induced errors.Moreover, human interpreters face limitations in memory capacity, often resulting in the omission of source content. Hence, there is a pressing need to develop simultaneous MT techniques to alleviate the burden on human interpreters and enhance the accessibility and affordability of simultaneous interpreting services.

In response to this need, early efforts such as Wang et al.'s neural network-based method aimed to segment streaming speech to enhance speech translation quality. Ma et al. proposed a straightforward yet effective "prefix-to-prefix" framework tailored to the simultaneity requirement, enabling controllable latency and reigniting interest in ST within the NLP community.

Since then, major research institutions including Google, Microsoft, Facebook, and Huawei have delved into research in this direction.

-

A brief history can explain the main developments of Machine Translation over time. The latter witnessed great achievements and It has been greatly improved. Now, MT can make accurate translations from/into many languages.

History of machine translation.

For war reasons, MT's first appearance was in the 50s during the Cold War, as the United States was afraid of the development of the Soviet Union. The Americans believed that if they could translate Soviet scientific documents, they would have been able to know the orientations of the Soviets and preceded them for many inventions and scientific and military discoveries.

Early MT methods predominantly fell into two categories: rule-based and corpus-based. Rule-based machine translation (RBMT) relies on manually written rules and bilingual dictionaries for translation. However, this approach was labor-intensive and challenging to scale for open-domain or multilingual translation.

In 1954, a significant milestone was achieved when Georgetown University, in collaboration with IBM, demonstrated Russian–English MT using the IBM-701 computer. Despite early enthusiasm, funding for MT research dwindled following the 1966 Automatic Language Processing Advisory Committee (ALPAC) report, which cast doubt on the feasibility of MT.

The dominance of RBMT continued until the emergence of corpus-based methods in the 2000s, fueled by the availability of bilingual corpora. Example-based machine translation (EBMT), statistical machine translation (SMT), and neural machine translation (NMT) became the main corpus-based approaches.

EBMT, proposed in the mid-1980s, relied on retrieving similar sentence pairs from bilingual corpora for translation. SMT, introduced in 1990, automated the process of learning translation knowledge from data. Its adoption was initially slow due to the complexity and the prevalence of RBMT. However, SMT gained traction with the release of SMT toolkits like Egypt and GIZA.

Phrase-based SMT methods, introduced in 2003, further improved translation quality and developed open-source systems like "Pharaoh" and "Moses." Google's internet translation service, based on phrase-based SMT, launched in 2006, followed by similar services from Microsoft and Baidu.

NMT, proposed in 2014, marked a significant breakthrough by using end-to-end neural network models for translation. Its rapid deployment online, within a year of its proposal, underscored its effectiveness. Subsequent advancements, including convolutional sequence-to-sequence models and the Transformer model, further enhanced translation quality.

The success of NMT spurred research into improving multilingual translation quality and efficiency. Additionally, simultaneous translation (ST) emerged as a promising direction, with significant progress in speech-to-speech (S2S) translation systems since the 1980s, culminating in open-domain spontaneous translation systems and the establishment of forums like the International Workshop on Spoken Language Translation (IWSLT) in 2004 to promote further development.

Haut du formulaire

The emergence of neural machine translation (NMT) and neural speech recognition has paved the way for new simultaneous translation (ST) systems, aiming to automate simultaneous interpreting by providing near-real-time translations with only a few seconds of delay. Simultaneous interpretation, a mentally demanding task, requires intense concentration and linguistic dexterity, making it challenging for human interpreters. Due to the demanding nature of simultaneous interpretation, there is a limited pool of qualified interpreters globally. Additionally, human interpreters often work in teams and rotate frequently to maintain accuracy and prevent fatigue-induced errors.Moreover, human interpreters face limitations in memory capacity, often resulting in the omission of source content. Hence, there is a pressing need to develop simultaneous MT techniques to alleviate the burden on human interpreters and enhance the accessibility and affordability of simultaneous interpreting services.

In response to this need, early efforts such as Wang et al.'s neural network-based method aimed to segment streaming speech to enhance speech translation quality. Ma et al. proposed a straightforward yet effective "prefix-to-prefix" framework tailored to the simultaneity requirement, enabling controllable latency and reigniting interest in ST within the NLP community.

Since then, major research institutions including Google, Microsoft, Facebook, and Huawei have delved into research in this direction.

-

-

-

Lesson

Computer-assisted Translation:

Software programs that help human translators to translate quickly and accurately. It relies on a Database that contains, basically, words, terms, expressions, and sentences. Then, This program suggests similar sentences that the translators have already translated and stocked in the TM.Advantages:

- Saving time (Quick translation)

- Consistency

- Quality-translations

- Personal database (Personalized glossary)

- Accurate TM.

- Respect for file form

-

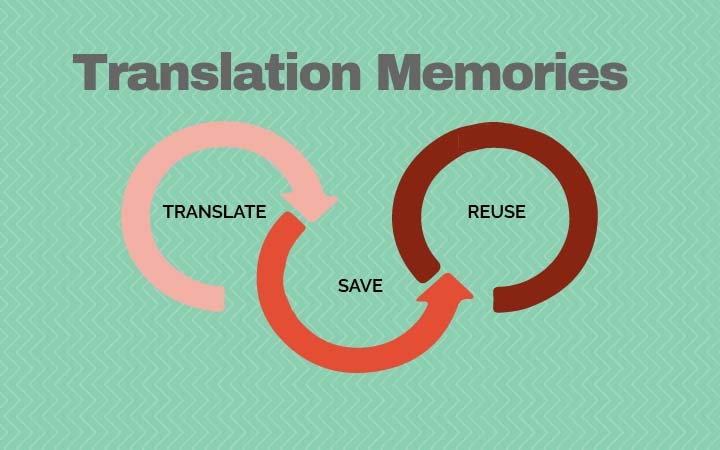

Translation Memory:

It is the most important advantage of CAT tools. It is created automatically once the translator begins his work. So, TM is considered an accurate tool of translation due to human intervention in the whole process.

-

Lesson

Computer-assisted Translation:

Software programs that help human translators to translate quickly and accurately. It relies on a Database that contains, basically, words, terms, expressions, and sentences. Then, This program suggests similar sentences that the translators have already translated and stocked in the TM.Advantages:

- Saving time (Quick translation)

- Consistency

- Quality-translations

- Personal database (Personalized glossary)

- Accurate TM.

- Respect for file form

-

Translation Memory:

It is the most important advantage of CAT tools. It is created automatically once the translator begins his work. So, TM is considered an accurate tool of translation due to human intervention in the whole process.

-

-

-

This video explains why humans can not be eliminated from doing the mission of translation now and in the future.

You are invited to watch the video presented by Alen Melby, Professor of Linguistics and the vice-president of the International Federation of Translation. -

File

Human Translation

Artificial Intelligence

Machine Translation -

This video explains why humans can not be eliminated from doing the mission of translation now and in the future.

You are invited to watch the video presented by Alen Melby, Professor of Linguistics and the vice-president of the International Federation of Translation. -

File

Human Translation

Artificial Intelligence

Machine Translation

-

-

-

AssignmentOpened: Tuesday, 7 May 2024, 12:00 AMDue: Tuesday, 14 May 2024, 12:00 AM

-

AssignmentOpened: Tuesday, 7 May 2024, 12:00 AMDue: Tuesday, 14 May 2024, 12:00 AM

-